OpenAI Agent Builder is a visual drag-and-drop tool that lets you create AI agents without extensive coding.

You connect nodes on a canvas to build workflows, integrate with third-party services through MCP connectors, and use built-in tools such as web search, file search, and code execution. It includes safety guardrails and can dramatically speed up development: work that used to take months can now be prototyped in hours.

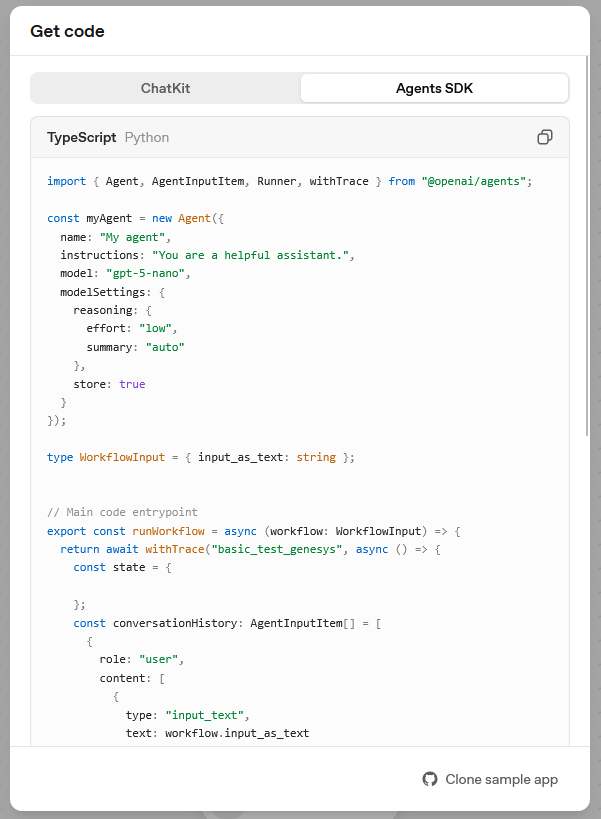

One of the most powerful aspects of OpenAI Agent Builder is the ability to export your visually designed workflows as TypeScript or Python code. This bridges the gap between rapid prototyping and enterprise deployment, particularly when integrating with Genesys Cloud.

Once you've designed your agent workflow in the visual canvas, you can export the TypeScript code and deploy it as an AWS Lambda function through Genesys Cloud Functions. Genesys Cloud Function data actions run your AWS Lambda code in the Genesys Cloud environment, so you can create custom actions backed by your own code and use them throughout Genesys Cloud.

To turn exported Agent Builder code into a Genesys Cloud Function, you need to add a handler - the entry point AWS Lambda uses when the function is invoked.

```typescript
import { Agent, AgentInputItem, Runner, withTrace } from "@openai/agents";
import { Context } from "aws-lambda";

// Hardcoded OpenAI API key
const OPENAI_API_KEY = "OPENAI_API_KEY";

// Configure OpenAI API key for the SDK
process.env.OPENAI_API_KEY = OPENAI_API_KEY;

const myAgent = new Agent({
  name: "My agent",
  instructions: "You are a helpful assistant.",
  model: "gpt-5-nano",
  modelSettings: {
    reasoning: {
      effort: "low",
      summary: "auto"
    },
    store: true
  }
});

// Input type for Genesys Cloud data action
type WorkflowInput = { input_as_text: string };

// Lambda event type for Genesys Cloud
interface GenesysLambdaEvent {
  body?: string;
  input_as_text?: string;
  [key: string]: any;
}

// Response type for Genesys Cloud
interface GenesysLambdaResponse {
  success: boolean;
  output_text?: string;
  error?: string;
  trace_id: string;
}

// AWS Lambda handler for Genesys Cloud
export const handler = async (
  event: GenesysLambdaEvent,
  context: Context
): Promise<GenesysLambdaResponse> => {
  try {
    // Parse input from event
    const inputText = event.input_as_text ||
      (typeof event.body === "string" ? JSON.parse(event.body) : event.body)?.input_as_text;

    if (!inputText) throw new Error("Missing input_as_text parameter");

    // Run the workflow
    const result = await runWorkflow({ input_as_text: inputText });

    // Return success response
    return {
      success: true,
      output_text: result.output_text,
      trace_id: context.awsRequestId
    };
  } catch (error) {
    console.error("Error:", error);
    return {
      success: false,
      error: error instanceof Error ? error.message : "Unknown error",
      trace_id: context.awsRequestId
    };
  }
};

// Core workflow logic
const runWorkflow = async (workflow: WorkflowInput): Promise<{ output_text: string }> => {
  return await withTrace("basic_test_genesys", async () => {
    const conversationHistory: AgentInputItem[] = [{
      role: "user",
      content: [{ type: "input_text", text: workflow.input_as_text }]
    }];

    const runner = new Runner({
      traceMetadata: {
        __trace_source__: "agent-builder",
        workflow_id: "wf_6907d6abcddc819081526e7811410c75038a9a539802070c"
      }
    });

    const result = await runner.run(myAgent, conversationHistory);

    if (!result.finalOutput) throw new Error("Agent result is undefined");

    return { output_text: result.finalOutput };
  });
};
```

Genesys Cloud Functions (AWS Lambda)

The most important addition is the handler function (lines 42-70). It is the entry point AWS Lambda requires: without it, Lambda doesn't know what code to execute when the function is invoked. The handler function:

- Receives events - Gets data from Genesys Cloud via the `event` parameter
- Extracts input - Parses the `input_as_text` from the event
- Calls your workflow - Executes the original `runWorkflow` logic
- Returns responses - Sends structured JSON back to Genesys Cloud
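The "extracts input" step can be looked at in isolation. The following is a minimal sketch, not the exported code: `extractInputText` is a hypothetical helper name, and the event shape is assumed to match the listing (the text arrives either as a top-level `input_as_text` field or inside a JSON `body`). Unlike the inline expression in the handler, it reports malformed JSON with its own error message.

```typescript
// Assumed event shape, modeled on the GenesysLambdaEvent interface in the listing.
interface GenesysLambdaEvent {
  body?: string | Record<string, unknown>;
  input_as_text?: string;
  [key: string]: unknown;
}

// Hypothetical helper: returns the user text from a top-level field or a JSON body.
function extractInputText(event: GenesysLambdaEvent): string {
  // Case 1: the data action passes the field directly.
  if (typeof event.input_as_text === "string" && event.input_as_text.trim() !== "") {
    return event.input_as_text;
  }

  // Case 2: the field is wrapped in a body, possibly serialized as a JSON string.
  let body: unknown = event.body;
  if (typeof body === "string") {
    try {
      body = JSON.parse(body);
    } catch {
      throw new Error("Request body is not valid JSON");
    }
  }

  const candidate = (body as Record<string, unknown> | null | undefined)?.input_as_text;
  if (typeof candidate !== "string" || candidate.trim() === "") {
    throw new Error("Missing input_as_text parameter");
  }
  return candidate;
}
```

Keeping the parsing in its own function makes it possible to exercise both event shapes without calling the OpenAI API.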

In this example the OpenAI API key is hardcoded (lines 4-8) to keep the listing short. For production deployments, configure it as an environment variable in the Genesys Cloud Functions settings instead.
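One possible way to drop the hardcoded key is to read it from the environment and fail fast when it is missing. This is a sketch under assumptions: `resolveApiKey` and `Env` are hypothetical names, and the variable is assumed to be called `OPENAI_API_KEY` in the function's settings.

```typescript
// Environment-like map; in a Lambda this would be process.env.
type Env = Record<string, string | undefined>;

// Hypothetical helper: returns the configured key or throws a descriptive error.
function resolveApiKey(env: Env): string {
  const key = env["OPENAI_API_KEY"]?.trim();
  if (!key) {
    throw new Error("OPENAI_API_KEY is not set");
  }
  return key;
}

// In the Lambda module this could replace the hardcoded constant:
//   const OPENAI_API_KEY = resolveApiKey(process.env);
```

Failing at startup with a clear message is usually easier to diagnose than an authentication error returned later from the API call.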

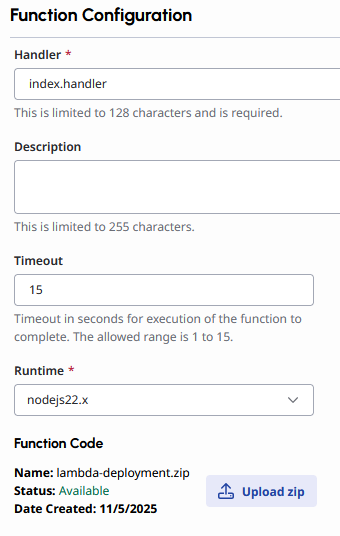

After modifying the code, compile the TypeScript to JavaScript using `npx tsc`, then create a deployment package by copying the compiled `index.js` and all production dependencies (`node_modules`) into a directory and zipping it. The resulting zip file can be uploaded directly as a Genesys Cloud Function. Set the handler to `index.handler` and configure the runtime to Node.js 20.x or 22.x for compatibility.

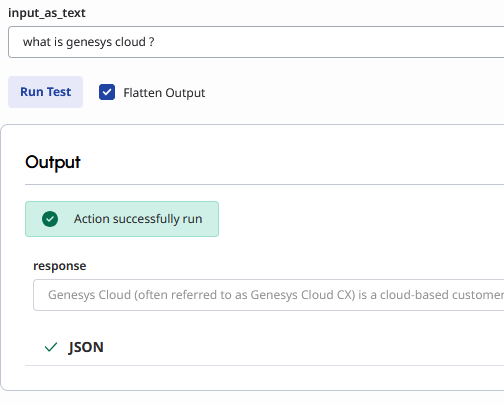

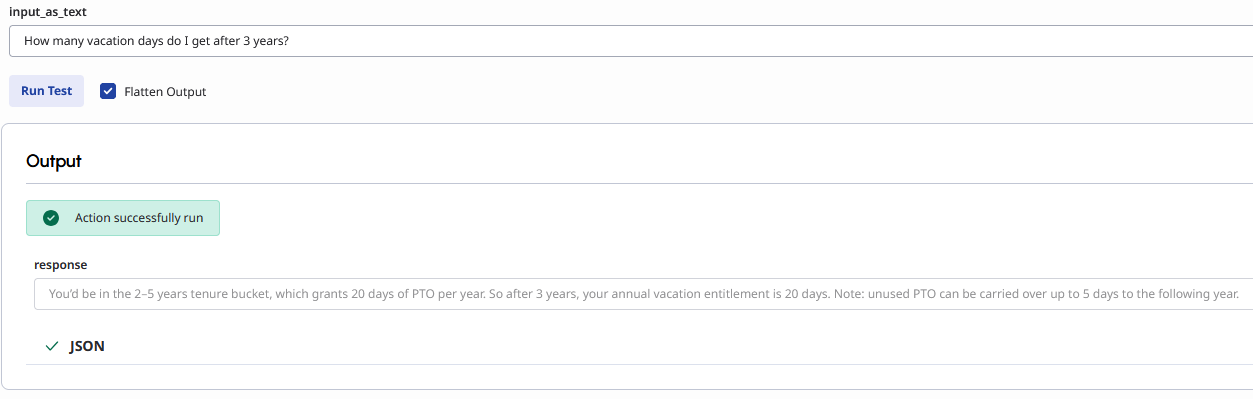

You can test the function directly in the Data Action editor with sample input like `{"input_as_text": "Hello"}` to verify the agent responds correctly. Once the Data Action is working as expected, integrate it into an Architect flow or a script for end-to-end testing in your contact center environment.

Keep in mind that Genesys Cloud Functions have a 15-second execution limit. If your function times out, you can often improve response speed by adjusting your OpenAI model settings, for instance by switching from GPT-5 to a faster model like GPT-5-nano or GPT-4. You can also try lowering the reasoning effort parameter to reduce processing time and help the function complete within the timeout window.
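Besides tuning the model, the handler can enforce its own deadline so that it returns a structured error instead of being cut off at the limit. The sketch below is one way to do that with a plain promise wrapper; `withTimeout` is a hypothetical helper, and the 13-second budget in the usage comment is an assumed value chosen only to leave headroom below the 15-second limit.

```typescript
// Hypothetical helper: settles with the result of `work`, or rejects after `ms` milliseconds.
// Note that it does not cancel the underlying work; it only stops waiting for it.
function withTimeout<T>(work: Promise<T>, ms: number): Promise<T> {
  return new Promise<T>((resolve, reject) => {
    const timer = setTimeout(() => {
      reject(new Error(`Timed out after ${ms} ms`));
    }, ms);

    work.then(
      (value) => {
        clearTimeout(timer);
        resolve(value);
      },
      (err) => {
        clearTimeout(timer);
        reject(err);
      }
    );
  });
}

// Possible use inside the handler's try block (13000 ms is an assumption):
//   const result = await withTimeout(runWorkflow({ input_as_text: inputText }), 13000);
```

Because the rejection is an ordinary `Error`, the handler's existing `catch` block would turn it into a `success: false` response.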

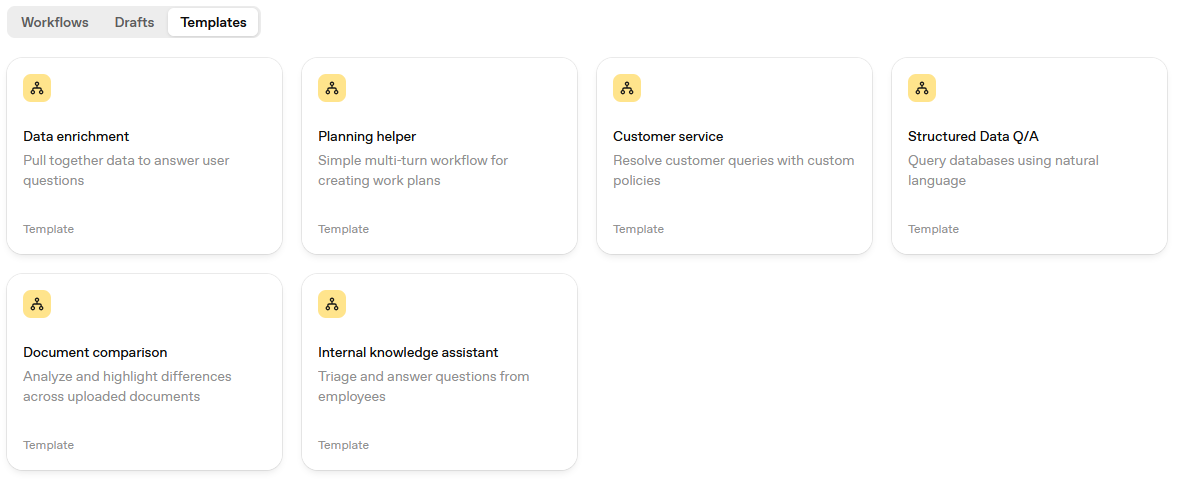

OpenAI's Agent Builder offers a variety of pre-built templates that can be deployed out of the box, including solutions for customer service, data enrichment, planning assistance, and structured data queries. These templates provide a quick starting point for common use cases, allowing users to launch functional AI agents with minimal configuration. However, the real power of Agent Builder lies in how easily you can create custom solutions tailored to your specific needs. A good example is how straightforward it is to build your own RAG (Retrieval-Augmented Generation) agent that draws on your knowledge base or documents to provide accurate, context-aware responses.

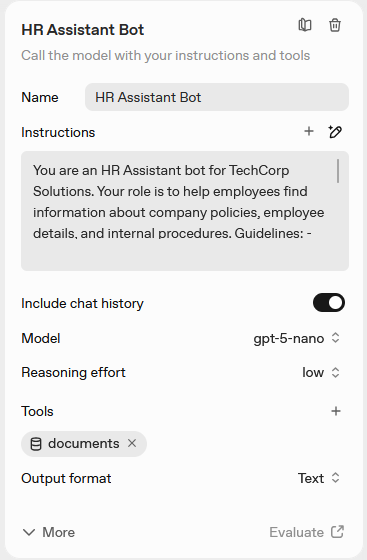

Let’s say we want to create an HR Assistant Bot.

This bot will help employees quickly find information about company policies, benefits, internal procedures, and general HR topics — all based on uploaded internal documentation. Before we touch the builder, we need to clearly describe what the bot does and how it should behave.

In Agent Builder, this is done through the instructions (system prompt).

```text
You are an HR Assistant bot for TechCorp Solutions. Your role is to help employees
find information about company policies, employee details, and internal procedures.

Guidelines:
- Always provide accurate information based on the uploaded documents
- If you don't find the answer in the documents, say "I don't have that information"
- Be friendly and professional
- Cite the source when providing information
- Protect confidential information - only share appropriate details
```

System Prompt

Now that our HR Assistant bot’s instructions are ready, it’s time to give it some real company data to work with.

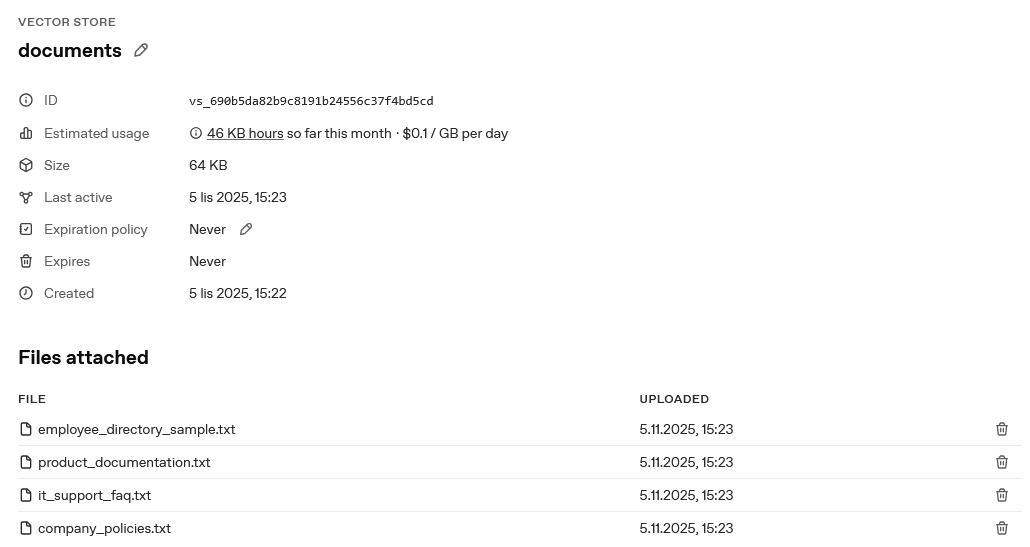

In OpenAI Agent Builder, this happens through a Vector Store — a secure storage space where your uploaded documents are indexed and converted into vector embeddings for efficient semantic search.

Let’s say we create a new vector store called “documents”.

Once created, we can upload multiple text files (in any supported file type), such as:

- employee_directory_sample.txt
- product_documentation.txt
- it_support_faq.txt
- company_policies.txt

Each document is automatically chunked and indexed - meaning the model won’t just read files sequentially, but will search through semantically relevant pieces when answering a user question.
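To make the idea of chunking and semantic lookup concrete, here is a toy sketch. It is not how the vector store works internally: real embeddings come from a learned model, whereas this example substitutes simple word-count vectors and cosine similarity. All function names (`chunkText`, `toVector`, `cosine`, `topChunks`) are hypothetical.

```typescript
// Split text into chunks of at most `size` words.
function chunkText(text: string, size: number): string[] {
  const words = text.split(/\s+/).filter((w) => w.length > 0);
  const chunks: string[] = [];
  for (let i = 0; i < words.length; i += size) {
    chunks.push(words.slice(i, i + size).join(" "));
  }
  return chunks;
}

// Bag-of-words stand-in for an embedding: lowercase word -> count.
function toVector(text: string): Map<string, number> {
  const vec = new Map<string, number>();
  const words = text.toLowerCase().match(/[a-z0-9]+/g) ?? [];
  for (let i = 0; i < words.length; i++) {
    vec.set(words[i], (vec.get(words[i]) ?? 0) + 1);
  }
  return vec;
}

// Cosine similarity between two sparse vectors (0 when either is empty).
function cosine(a: Map<string, number>, b: Map<string, number>): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  a.forEach((count, word) => {
    dot += count * (b.get(word) ?? 0);
    normA += count * count;
  });
  b.forEach((count) => {
    normB += count * count;
  });
  return normA === 0 || normB === 0 ? 0 : dot / Math.sqrt(normA * normB);
}

// Return the k chunks most similar to the query, best match first.
function topChunks(query: string, chunks: string[], k: number): string[] {
  const q = toVector(query);
  return chunks
    .map((chunk) => ({ chunk, score: cosine(q, toVector(chunk)) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map((entry) => entry.chunk);
}
```

With this setup, a question is compared against every chunk and only the best-scoring pieces are handed to the model, which is the general shape of the retrieval step described above.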

Now that our vector store is ready and filled with HR data, the next step is to connect it to the assistant so it can actually retrieve information from those files. In OpenAI Agent Builder, this is done in the Tools section of your assistant’s configuration panel, where you can attach the vector store as a retrieval tool.

With the HR Assistant Bot now connected to its knowledge base, the next step is to test it in action - first in the Agent Builder UI, and then in Genesys Cloud using the Lambda-based Function and Data Action described earlier.

This HR Assistant example demonstrates just one simple use case. OpenAI Agent Builder's advanced features unlock far more sophisticated integrations.

MCP Connectors enable direct integration with Salesforce, ServiceNow, and other enterprise systems - allowing agents to not just retrieve information but execute actions across your entire tech stack.

Web Search functionality provides real-time information access, keeping responses current without manual knowledge base updates.

Multi-agent orchestration lets you build specialized agent teams that collaborate on complex workflows, while code execution tools enable dynamic calculations and custom logic execution.

When combined with Genesys Cloud's contact center capabilities, these features support truly transformative use cases — from automated multi-step issue resolution to predictive customer outreach. The key is starting simple, then progressively expanding as your needs evolve.